How are LLMs built?

Building blocks of LLMs and “Attention Is All You Need”.

In this article, we will talk about how LLMs (Large Language Models) are built.

What is a Language Model (large or small)?

Before learning how to build an LLM, let’s see what it is.

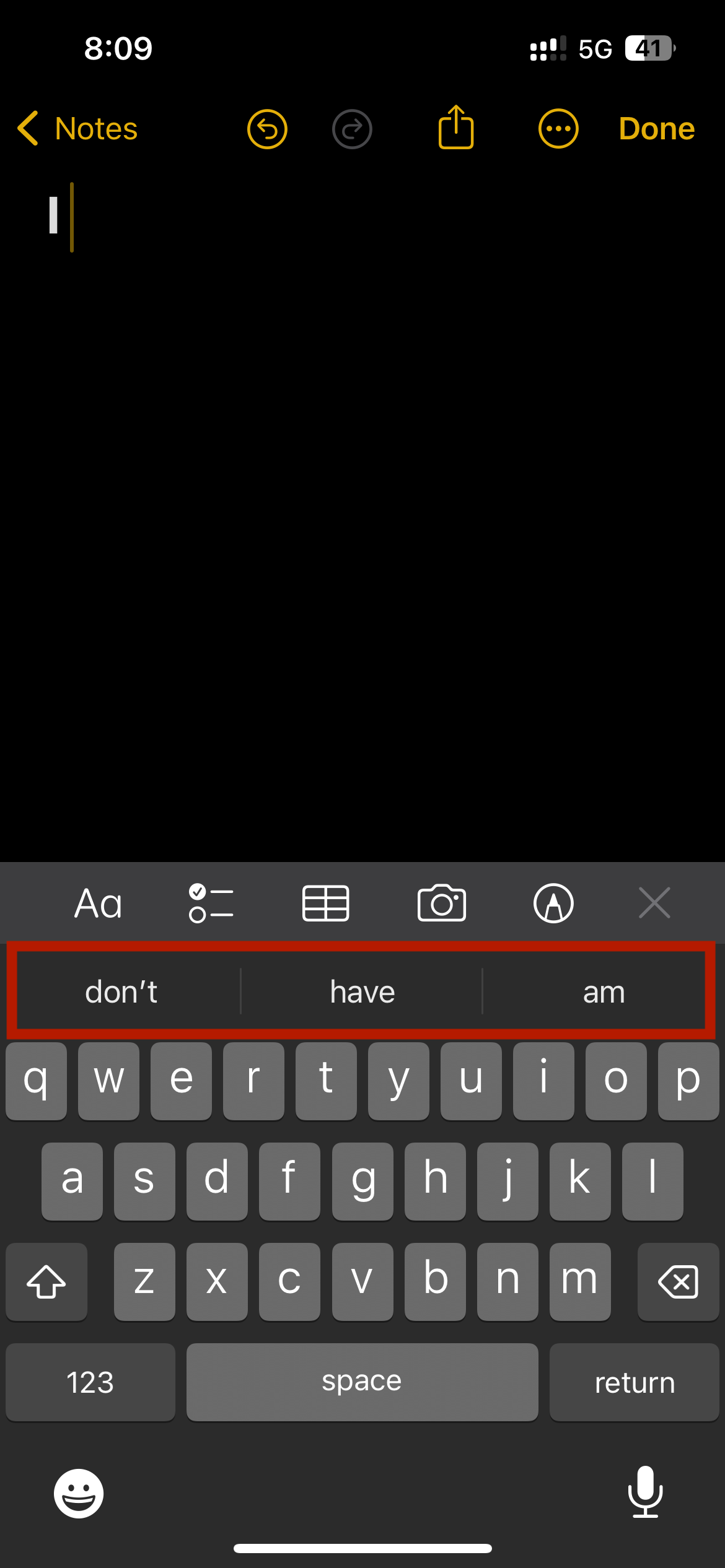

You must have seen this suggestion on your keyboard. It suggests the next word based on the previous words you have typed. That is what a language model does.

So, LLMs are deep learning models trained on huge amounts of text data (often terabytes). When you ask ChatGPT, “I am not feeling well, pretend like a therapist and heal me”, the model doesn't look up a stored answer. It predicts the most likely next words, one word (or piece of a word) at a time, based on the patterns it learned during training. If its training data included text similar to posts in the “r/therapist” subreddit, its answer will probably sound like them, and maybe it will heal you :)
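To make “predict the next word” concrete, here is a minimal sketch of the idea using a bigram model: it counts which word follows which in a tiny, made-up corpus and suggests the most frequent followers, much like a keyboard suggestion bar. The corpus and function names are hypothetical; real LLMs use neural networks over tokens, not raw word counts.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def suggest_next(counts: dict[str, Counter], word: str, k: int = 3) -> list[str]:
    """Return up to k most frequent next words (like a keyboard suggestion bar)."""
    followers = counts.get(word.lower(), Counter())
    return [w for w, _ in followers.most_common(k)]


# Hypothetical toy corpus
corpus = [
    "I am going home",
    "I am going to the office",
    "I am fine",
    "you are going home",
]
model = train_bigram(corpus)
print(suggest_next(model, "am"))     # ['going', 'fine']
print(suggest_next(model, "going"))  # ['home', 'to']
```

An LLM does the same job, predicting what comes next, but it conditions on the whole preceding text instead of just the previous word.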

Tokenizers

Before directly diving into creating the LLM, let’s learn a few more pieces of jargon.

When you are training an LLM, you give it a very big amount of text, but the model can’t work with one big chunk of raw text directly; it needs the text split into small units first.

So, a tokenizer takes a big chunk of text, like a sentence or paragraph, and breaks it down into smaller, more manageable pieces that the model can work with. These pieces are called tokens.

You might have seen claims like “Gemini can handle 1M input tokens” and “GPT-4 has a limit of 8k tokens”; these are the tokens they are talking about.

```python
import nltk
from nltk.tokenize import word_tokenize

# word_tokenize needs NLTK's Punkt models; newer NLTK releases look for "punkt_tab"
nltk.download("punkt")
nltk.download("punkt_tab")

text = "Tokenization is the process of breaking down text into individual words or subwords, called tokens, which are then used as input for a language model."

tokens = word_tokenize(text)
print(tokens)
```

```
['Tokenization', 'is', 'the', 'process', 'of', 'breaking', 'down', 'text', 'into', 'individual', 'words', 'or', 'subwords', ',', 'called', 'tokens', ',', 'which', 'are', 'then', 'used', 'as', 'input', 'for', 'a', 'language', 'model', '.']
```
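The NLTK tokenizer above splits text into whole words and punctuation. Many LLM tokenizers (GPT-2’s, for example) instead split text into subwords using a variant of byte-pair encoding (BPE). Below is a minimal, character-level sketch of the BPE idea, assuming a tiny made-up word list: it repeatedly merges the most frequent pair of adjacent symbols. Production tokenizers work on bytes, handle spaces and special tokens, and are far more optimized, so treat this only as an illustration.

```python
from collections import Counter


def merge_pair(symbols: list[str], pair: tuple[str, str]) -> list[str]:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def learn_bpe(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn up to num_merges merge rules from a list of words."""
    vocab = Counter(tuple(w) for w in words)  # each word starts as single characters
    merges = []
    for _ in range(num_merges):
        pairs: Counter = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        new_vocab: Counter = Counter()
        for symbols, freq in vocab.items():
            new_vocab[tuple(merge_pair(list(symbols), best))] += freq
        vocab = new_vocab
    return merges


def bpe_tokenize(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Split a word into subword tokens by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols


words = ["low", "low", "lower", "newest", "newest", "widest"]  # hypothetical corpus
merges = learn_bpe(words, num_merges=6)
print(merges)
print(bpe_tokenize("lowest", merges))
```

Frequent words end up as a single token, while rare words like “lowest” are split into known pieces, which is why a model’s token count is usually higher than its word count.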

Word Embeddings

Now we have converted our text into tokens (words).

But as you know, the computer only understands numbers, not words :(

Word embedding is a way to turn words into numbers (vectors) that a computer can work with, but in a clever way. These numbers aren't random: they capture the relationships between words based on how they're used in text. Words that appear in similar contexts end up with similar numbers.

For example, if the computer sees "king" used with words like "crown" and "throne" a lot, it will learn that "king" is related to royalty.
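Here is a minimal sketch of that “words used together” idea, assuming a tiny made-up corpus. It builds count-based embeddings (each word becomes a vector of how often other words appear near it) and compares them with cosine similarity. Real LLMs learn dense embedding vectors during training instead of counting, but the intuition is the same: words used in similar contexts get similar vectors.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences: list[str], window: int = 2) -> dict[str, list[int]]:
    """For each word, count the words appearing within `window` positions of it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for s in sentences:
        words = s.lower().split()
        for i, w in enumerate(words):
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    counts[w][words[j]] += 1
    vocab = sorted({w for s in sentences for w in s.lower().split()})
    return {w: [counts[w][c] for c in vocab] for w in vocab}


def cosine(a: list[int], b: list[int]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


# Hypothetical toy corpus
sentences = [
    "the king wore the crown",
    "the king sat on the throne",
    "the queen wore the crown",
    "the queen sat on the throne",
    "the dog ate the bone",
    "the dog chased the ball",
]
vecs = cooccurrence_vectors(sentences)
print(cosine(vecs["king"], vecs["queen"]))  # about 1.0: identical contexts
print(cosine(vecs["king"], vecs["dog"]))    # roughly 0.82: only "the" is shared
```

“king” and “queen” come out as near neighbours because they appear next to the same words (“crown”, “throne”), even though the two words never appear together.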

This lets computers do cool things with text, like:

Understand jokes: If a joke uses wordplay, like "What do you call a fish with no eyes? Fsh!", embeddings can help: if "fish" and "fsh" end up close in the number world, the computer has a better chance of getting the wordplay.

Translate languages: By understanding how words in one language relate to each other, computers can learn those relationships in another language.

Recommend products: Knowing how words relate lets computers recommend things you might like based on what you've looked at before.

So, word embedding is kind of like creating a special dictionary for computers, where words are translated into numbers based on their meaning and how they're used. This helps computers understand the nuances of language a little bit better.
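In practice, that “special dictionary” is usually a lookup table: each token gets an integer ID, and each ID indexes a row of numbers (a vector). The sketch below assumes a tiny vocabulary and fills the table with random numbers as a stand-in; in a real model those numbers are learned during training along with the rest of the network.

```python
import random


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def init_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> list[list[float]]:
    """One row of `dim` numbers per token ID (random here; learned in a real model)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


tokens = ["the", "cat", "sat", "on", "the", "mat"]
vocab = build_vocab(tokens)  # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
table = init_embedding_table(len(vocab), dim=4)
ids = [vocab[t] for t in tokens]  # [0, 1, 2, 3, 0, 4]
vectors = [table[i] for i in ids]  # one 4-number vector per token
```

Notice that both occurrences of “the” get exactly the same vector: at this stage the numbers say nothing about position or context.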

Positional Encoding

Imagine you're reading a sentence. You understand the meaning not just from the individual words, but also from their order. For instance, "The cat sat on the mat" makes sense, but "Mat sat at the on the" doesn't. A transformer model, however, has no built-in sense of word order: it looks at all the words of a sentence at once rather than one after another.

Positional encoding is a technique that helps computers understand the order of words in a sentence. It's like giving each word in a sentence a special code based on its position. Here's a simplified way to think about it:

Each position gets its own code: the first word gets one vector of numbers, the second word a slightly different one, and so on.

The code is added to the word's embedding: so "cat" at position 2 and "cat" at position 5 end up with different vectors.

In the original Transformer, these codes are built from sine and cosine waves of different frequencies, so every position gets a distinct code and nearby positions get similar codes.

Think of it like rearranging furniture in a room. Just knowing what each piece of furniture is doesn't tell you how the room is laid out. Positional encoding helps the computer understand the "layout" of a sentence, where each word is positioned, and how it relates to the others.
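For the curious, the original Transformer paper uses sinusoidal positional encodings: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)), where d_model is the embedding size. Below is a minimal pure-Python sketch of that formula; the toy sizes are assumptions for illustration. Many newer LLMs use other schemes (learned or rotary position embeddings), so treat this as one example rather than the only way.

```python
import math


def positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Sinusoidal positional encodings: one d_model-sized vector per position."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share one frequency: sine on even, cosine on odd
            k = i // 2
            angle = pos / (10000 ** (2 * k / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def add_positions(embeddings: list[list[float]]) -> list[list[float]]:
    """Add the positional code to each word's embedding (vectors of the same size)."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, code)] for emb, code in zip(embeddings, pe)]


pe = positional_encoding(seq_len=6, d_model=8)
print([round(x, 3) for x in pe[0]])  # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]

# The same word vector at two positions becomes two different vectors
same_word_twice = add_positions([[0.5] * 8, [0.5] * 8])
print(same_word_twice[0] != same_word_twice[1])  # True
```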

Attention

Attention is a very useful technique that helps language models understand the context. To understand how attention works, consider the following two sentences:

Sentence 1: The bank of the river.

Sentence 2: Money in the bank.

As you can see, the word ‘bank’ appears in both, but with different meanings. In sentence 1, we are referring to the land at the side of the river, and in the second one to the institution that holds money. The computer has no idea of this, so we need to somehow inject that knowledge into it. What can help us? Well, it seems that the other words in the sentence can come to our rescue. For the first sentence, the words ‘the’ and ‘of’ do us no good. But the word ‘river’ is the one that is letting us know that we’re talking about the land at the side of the river. Similarly, in sentence 2, the word ‘money’ is the one that is helping us understand that the word ‘bank’ is now referring to the institution that holds money.

In short, attention moves each word closer, in the word embedding space, to the words that give it context: each word’s new vector is a weighted average of the vectors of all the words in the sentence, with more relevant words getting bigger weights. In that way, the word “bank” in the sentence “Money in the bank” will be moved closer to the word “money”. Equivalently, in the sentence “The bank of the river”, the word “bank” will be moved closer to the word “river”. That way, the modified word “bank” in each of the two sentences will carry some of the information of the neighbouring words, adding context to it.
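Here is a minimal sketch of that “move closer” effect using scaled dot-product self-attention, the core operation from the Transformer paper. To keep it tiny, it assumes hand-made 2-number embeddings (first number ≈ “money-ness”, second ≈ “river-ness”) and skips the learned query/key/value projection matrices a real transformer uses, so each word’s vector serves as its own query, key and value.

```python
import math

# Hypothetical 2-D embeddings: [money-ness, river-ness]
EMB = {
    "bank": [0.5, 0.5],
    "money": [1.0, 0.0],
    "river": [0.0, 1.0],
    "the": [0.1, 0.1],
    "of": [0.1, 0.1],
    "in": [0.1, 0.1],
}


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Each output is a weighted average of all vectors, weighted by similarity."""
    d = len(vectors[0])
    out = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return out


def contextual_vector(sentence: str, word: str) -> list[float]:
    """Run self-attention over the sentence and return the new vector for `word`."""
    words = sentence.lower().split()
    new_vectors = self_attention([EMB[w] for w in words])
    return new_vectors[words.index(word)]


print(contextual_vector("money in the bank", "bank"))      # first number is larger
print(contextual_vector("the bank of the river", "bank"))  # second number is larger
```

“bank” starts with the same vector in both sentences, but after attention it leans towards “money” in one and towards “river” in the other.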

The attention step used in transformer models is actually much more powerful, and it’s called multi-head attention. In multi-head attention, several attention operations (“heads”) run in parallel, each with its own learned projections of the word vectors, so each head can focus on a different kind of relationship; their outputs are then combined to modify the vectors and add context to them. Multi-head attention has helped language models reach much higher levels of efficacy when processing and generating text.
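A minimal sketch of multi-head attention, assuming random matrices as stand-ins for the learned query/key/value and output projections: each head projects the vectors into a smaller space, runs scaled dot-product attention there, and the heads’ results are concatenated and mixed back to the original size. Real implementations use tensor libraries, trained weights and, for text generation, a causal mask; none of that is included here.

```python
import math
import random


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def attention(q, k, v):
    """Scaled dot-product attention for one head."""
    d = len(q[0])
    out = []
    for qi in q:
        weights = softmax([sum(x * y for x, y in zip(qi, kj)) / math.sqrt(d) for kj in k])
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def random_matrix(rows: int, cols: int, rng: random.Random) -> list[list[float]]:
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def multi_head_attention(x, num_heads: int, rng: random.Random):
    d_model = len(x[0])
    d_head = d_model // num_heads  # assumes d_model is divisible by num_heads
    heads = []
    for _ in range(num_heads):
        # Each head gets its own projections (random here, learned in a real model)
        wq, wk, wv = (random_matrix(d_model, d_head, rng) for _ in range(3))
        heads.append(attention(matmul(x, wq), matmul(x, wk), matmul(x, wv)))
    # Concatenate the heads' outputs for each token, then mix them with an output matrix
    concat = [sum((head[t] for head in heads), []) for t in range(len(x))]
    wo = random_matrix(num_heads * d_head, d_model, rng)
    return matmul(concat, wo)


rng = random.Random(0)
x = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(3)]  # 3 tokens, d_model = 8
out = multi_head_attention(x, num_heads=2, rng=rng)
print(len(out), len(out[0]))  # 3 8
```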

The research paper that revolutionized modern AI is “Attention Is All You Need”, published by researchers at Google in 2017.

Transformer

The Transformer architecture was introduced in the paper “Attention Is All You Need” :)

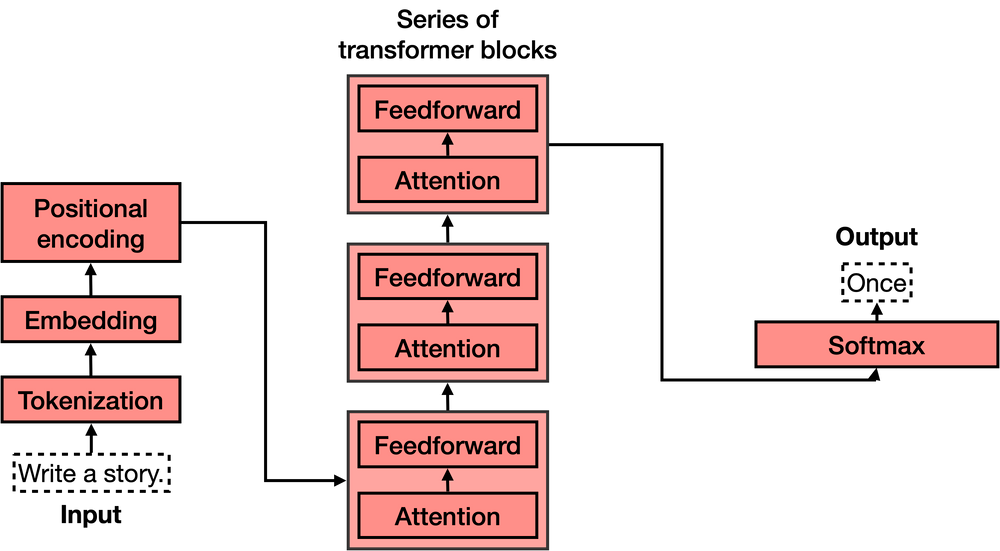

If you’ve seen the architecture of a transformer model, you may have jumped in awe like I did the first time I saw it: it looks quite complicated! However, when you break it down into its most important parts, it’s not so bad. The transformer has 4 main parts:

Tokenization

Embedding

Positional encoding

Transformer block (several of these)

The fourth one, the transformer block, is the most complex of all. Many of these blocks are stacked one after another, and each one contains two main parts: the attention component and the feedforward component.
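To see how the two parts fit together, here is a minimal single-head sketch of one transformer block in the post-norm arrangement of the original paper: attention, then a feedforward network, each wrapped with a residual (“add the input back”) connection and layer normalization. The random weights, the sizes, and the simplified layer norm without learned scale/shift are assumptions for illustration; it also omits multi-head attention, dropout and masking.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(x: Matrix, eps: float = 1e-5) -> Matrix:
    """Normalize each token vector to mean 0, variance ~1 (no learned scale/shift)."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d = len(q[0])
    out = []
    for qi in q:
        w = softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k])
        out.append([sum(wt * vj[c] for wt, vj in zip(w, v)) for c in range(len(v[0]))])
    return out


def feed_forward(x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]  # ReLU
    return matmul(hidden, w2)


def transformer_block(x: Matrix, p: dict[str, Matrix]) -> Matrix:
    x = layer_norm(add(x, self_attention(x, p["wq"], p["wk"], p["wv"])))  # attention + residual
    return layer_norm(add(x, feed_forward(x, p["w1"], p["w2"])))  # feedforward + residual


def random_params(d_model: int, d_ff: int, rng: random.Random) -> dict[str, Matrix]:
    def m(rows: int, cols: int) -> Matrix:
        return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]
    return {"wq": m(d_model, d_model), "wk": m(d_model, d_model), "wv": m(d_model, d_model),
            "w1": m(d_model, d_ff), "w2": m(d_ff, d_model)}


rng = random.Random(42)
# Stand-in for "embeddings + positional encoding" of 5 tokens, d_model = 8
x = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(5)]
for params in [random_params(8, 32, rng) for _ in range(2)]:  # stack 2 blocks
    x = transformer_block(x, params)
print(len(x), len(x[0]))  # 5 8
```

Because every block takes and returns vectors of the same shape, blocks can be stacked as deep as the compute budget allows.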

Now I’ll take a break and let you research the topics above. Next, I will be writing about “How to build an SLM from scratch?”, where SLM stands for Small Language Model. But you might be asking: WHY NOT an LLM??? Read below to find out why.

The problems with building an LLM

Let’s take an example of the Llama 2 model built by Meta.

Llama 2 with 7 billion parameters (much smaller than GPT-3, which has 175B parameters) takes around 180,000 GPU hours to train, which would cost roughly 200,000 USD from scratch. I have neither a GPU cluster nor the money, so let’s stick to our plan of building a Small Language Model (SLM).
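The cost figure is simple arithmetic: GPU hours × price per GPU hour. The sketch below uses the ~180,000 GPU-hour figure quoted above, an assumed price of 1.10 USD per GPU hour (a hypothetical number; real cloud prices vary widely), and an assumed cluster of 1,000 GPUs to show the wall-clock time.

```python
gpu_hours = 180_000          # approximate figure for Llama 2 7B, as quoted above
price_per_gpu_hour = 1.10    # ASSUMPTION: hypothetical USD price per GPU hour
num_gpus = 1_000             # ASSUMPTION: hypothetical cluster size

cost_usd = gpu_hours * price_per_gpu_hour
wall_clock_days = gpu_hours / num_gpus / 24

print(f"Estimated cost: ~{cost_usd:,.0f} USD")          # ~198,000 USD
print(f"Wall-clock time: ~{wall_clock_days:.1f} days")  # ~7.5 days
```

Even with a thousand GPUs, that is over a week of continuous training for the smallest Llama 2 model.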

So now we know we can’t build an LLM, and we’re going to build an SLM instead, which is just a smaller version of an LLM.
